Reviews:

Babeltech (25 games benchmarked)

Clubic (French language)

Gamers Nexus

Gamespot

Gamestar (German language)

Guru 3D

HardOCP

Hardware Canucks

Hardware Zone

Hexus.net

Hot Hardware

Legitreviews

PC Gamer

PCper

PCworld

Polygon

Sweclockers (Swedish language)

Techspot

Tom's Hardware

Tweaktown

WCCFtech

SLI Results:

Hardware Zone

Video reviews:

Gamers Nexus

Hardware Unboxed

Hardware Unboxed (overclocking)

Linus Tech Tips

PCper

Pretty solid results, and power consumption at stock clocks is nice.

SLI Video Reviews:

Gamers Nexus

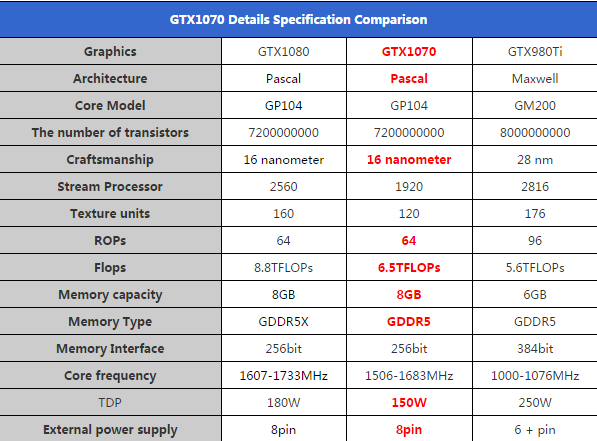

Specs:

Power consumption:

Battlefield 4 results:

BioShock Infinite results:

Ashes of the Singularity results:

Overclocking:

Overclocking result (Ashes of the Singularity):

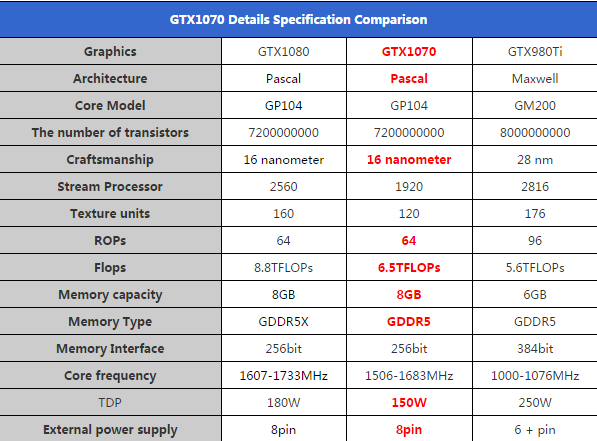
